I’m not even going to pretend this isn’t cool as hell: I got a breakthrough on my tool for model-based testing, the MBT Workbench. I can now automatically generate a test suite from a service description and invoke the service with every generated test.

Last time, I was talking about how we set up tests in the MBT Workbench, closely following the meta-model. I mentioned that the immediate todo was to implement a viewer for output mappings and to actually generate input objects based on input mappings. Well, I did both, but let’s focus on the latter part because that’s hella more cool. We’re still dealing with the original insurance example: car insurances are offered based on gender and age, and not to males under 25.

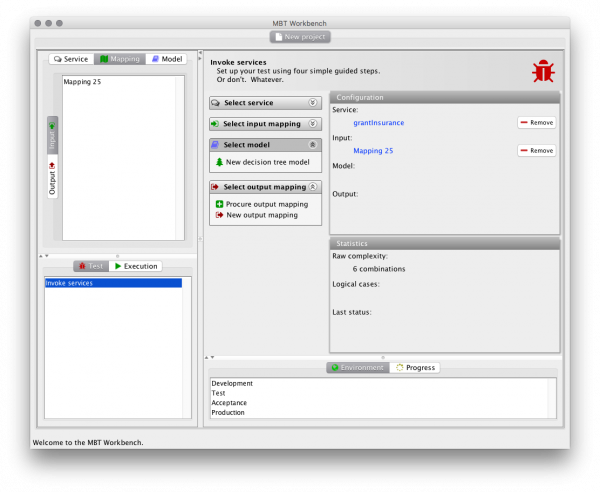

Now, we can set up our test; it’s not much different from last time (I implemented renaming, so all objects have non-generic names):

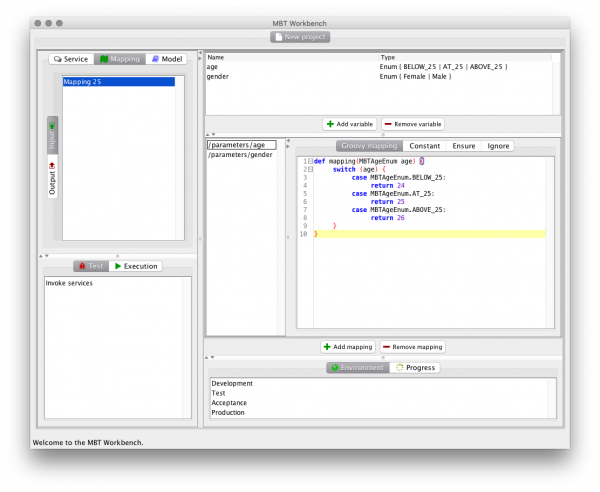

We use the same auto-generated mapping (except I weeded out a minor error in the generated Groovy code):

What happens is that we enumerate all combinations of input parameters (such as (Female, BELOW_25) and (Male, ABOVE_25)). For each combination, we then create a request object using reflection. We iterate over all mappings and evaluate the Groovy mapping using the appropriate parameters. The appropriate parameters are found by asking Groovy to parse the mapping into an AST, which we then inspect. The resulting value is set on the request object using JXPath and a bit of automatic type conversion. This whole process then yields a domain object representing the SOAP request. The SOAP request is simply fired off using a CXF dynamic client created from the original WSDL.
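The steps above can be sketched in a few lines. This is a simplified Python illustration, not the Workbench’s actual Java/Groovy code: plain functions stand in for the Groovy mappings, a nested dict stands in for the reflected request object, `set_path` is a hypothetical stand-in for JXPath, and inspecting the function signature replaces inspecting the Groovy AST. The parameter names, the `EXACTLY_25` age class, and the representative ages are assumptions; the post only names `BELOW_25` and `ABOVE_25`.

```python
import inspect
from itertools import product

# Input parameters and their abstract values. EXACTLY_25 is an assumed
# third age class; the post only names BELOW_25 and ABOVE_25.
PARAMETERS = {
    "gender": ["Female", "Male"],
    "age_class": ["BELOW_25", "EXACTLY_25", "ABOVE_25"],
}

# Hypothetical mappings: target path in the request -> function computing
# the value. Plain functions stand in for the Groovy snippets.
MAPPINGS = {
    "grantInsuranceRequest/gender": lambda gender: gender,
    "grantInsuranceRequest/age": lambda age_class: {
        "BELOW_25": 24, "EXACTLY_25": 25, "ABOVE_25": 26}[age_class],
}


def set_path(target, path, value):
    """Set a value at a slash-separated path, creating nested dicts as needed."""
    *parents, leaf = path.split("/")
    for key in parents:
        target = target.setdefault(key, {})
    target[leaf] = value


def generate_requests(parameters, mappings):
    """Build one request per combination of parameter values."""
    names = list(parameters)
    requests = []
    for values in product(*(parameters[n] for n in names)):
        combination = dict(zip(names, values))
        request = {}
        for path, mapping in mappings.items():
            # Work out which parameters this mapping needs by looking at
            # its signature, then evaluate it with just those values.
            needed = inspect.signature(mapping).parameters
            args = {name: combination[name] for name in needed}
            set_path(request, path, mapping(**args))
        requests.append(request)
    return requests


requests = generate_requests(PARAMETERS, MAPPINGS)
```

With two genders and three assumed age classes, the sketch produces six request objects, one per combination. Each of them would then be serialized and sent to the service.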

And that’s it. The translation procedure is immensely complex and cuts a few corners here and there, but it actually works. How do I know that? Well, I tested it!

To test it, I wanted to just set up a dummy service. I originally wanted to use Parasoft Virtualize CE, mostly because I wanted to get to know it. I even requested a license, but it seems something is fishy with their mail server, because I never received a response from them (my mail host has a lot of provisions for blocking spam, so that might be it). I even tried with another mail address, but they have some manual filtering process, so I never got a response there either.

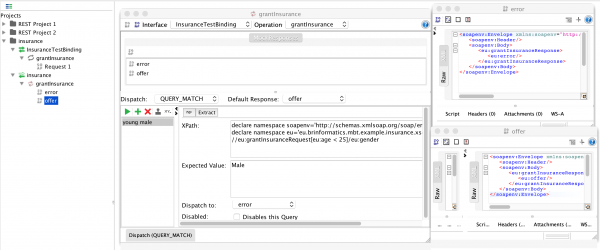

In the end, I said fuck it and just went with what I know: SoapUI MockServices. I set up a quick project based on my WSDL, and created an “ok” (offer) and an “error” response. These I tied to a mock service, and used a bit of XPath to distinguish between the cases:

(If you wonder why the XPath expression is so weird, it’s because I only have one expression to match two values, and I can only match on equality.) The mock service returns an offer unless the request contains an age below 25 and a gender = Male, in which case an error is returned.
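For reference, the rule the mock service encodes can be written as a plain function. This is only a sketch of the decision logic in Python, not the SoapUI XPath dispatch itself; the function name is made up, and the return values reuse the “ok” and “error” response names from above.

```python
def mock_response(age, gender):
    """Return the name of the mock response for a request.

    An offer ("ok") is returned unless the applicant is male and
    younger than 25, in which case "error" is returned.
    """
    if gender == "Male" and age < 25:
        return "error"
    return "ok"
```

So `mock_response(24, "Male")` picks the error response, while `mock_response(24, "Female")` and `mock_response(25, "Male")` both pick the offer.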

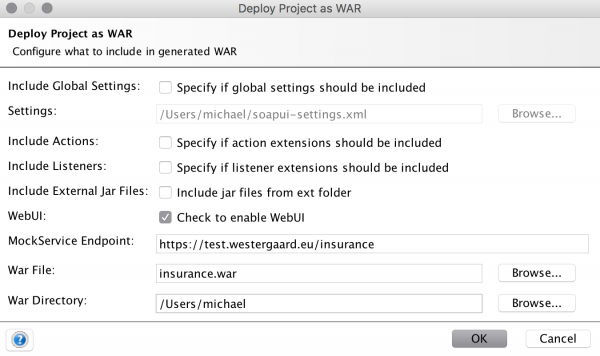

I used SoapUI to generate a WAR file from the mock-service:

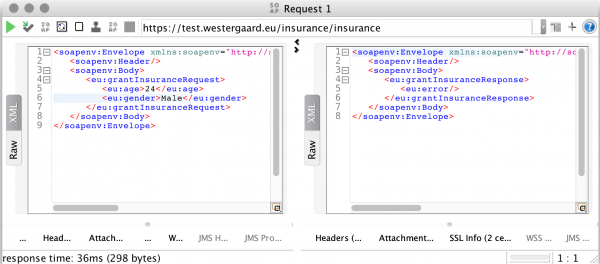

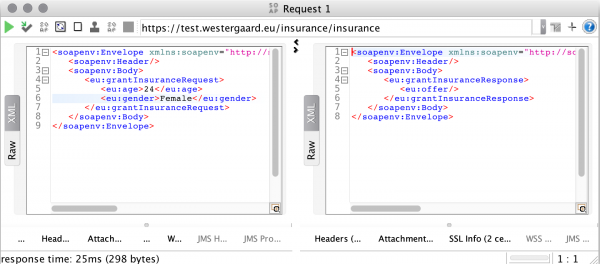

I installed this WAR file in my regular test application container and sent off a couple of test requests the old-fashioned way using SoapUI:

It seems to work, but how can you be certain with such sporadic ad-hoc testing?

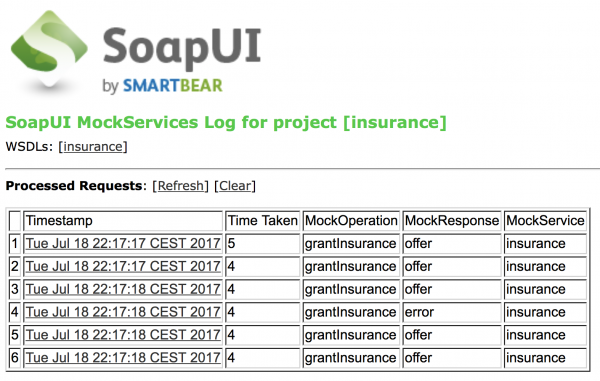

Well, MBT Workbench to the rescue. After executing the above test (really, I am using the “Procure output mapping” action), I get the below result:

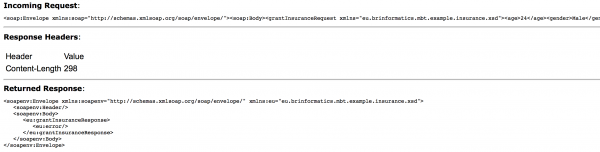

We see that the stub has received the 6 requests MBT Workbench indicates it will generate. We see that 5 of the requests result in an offer (the MockResponse column) and one in an error.

We inspect details of request and response 4:

It’s a bit small, but here’s the request that was generated almost entirely automatically:

```xml
<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <grantInsuranceRequest xmlns="eu.brinformatics.mbt.example.insurance.xsd">
      <age>24</age>
      <gender>Male</gender>
    </grantInsuranceRequest>
  </soap:Body>
</soap:Envelope>
```

We see the request indeed corresponds to the first one we did manually, which is intended to yield an error.
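If you would rather check this mechanically than by squinting at a screenshot, a request like this one can be parsed with a namespace-aware XML parser. Below is a minimal Python sketch using the standard library; the `ins` prefix and the variable names are my own choices for illustration, while the namespace URIs and values are taken from the request itself.

```python
import xml.etree.ElementTree as ET

REQUEST = """<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Body>
    <grantInsuranceRequest xmlns="eu.brinformatics.mbt.example.insurance.xsd">
      <age>24</age>
      <gender>Male</gender>
    </grantInsuranceRequest>
  </soap:Body>
</soap:Envelope>"""

# Prefixes used for lookups; "ins" is an arbitrary prefix chosen here for
# the default namespace of the request element.
NS = {
    "soap": "http://schemas.xmlsoap.org/soap/envelope/",
    "ins": "eu.brinformatics.mbt.example.insurance.xsd",
}

root = ET.fromstring(REQUEST)
body = root.find("soap:Body/ins:grantInsuranceRequest", NS)

# The child elements inherit the default namespace of their parent.
age = int(body.findtext("ins:age", namespaces=NS))
gender = body.findtext("ins:gender", namespaces=NS)

# The combination that should be refused: a male applicant under 25.
expects_error = gender == "Male" and age < 25
```

Here `expects_error` ends up `True`, so this is the one combination that should be refused.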

If you don’t think this is entirely wicked-cool, boo to you! The coolness is probably due to so many little pieces, which I’ve worked on separately for almost a month, finally coming together as originally designed, so I might be biased.

Now, the next step is to use the response to assist users in generating output mappings. Here’s a sneak preview of the viewer/editor for output mappings. There’s nothing in there, because it is still work-in-progress:

Once the output mappings are ready, I can finally get to the interesting part, the generation of models and logical test-cases.