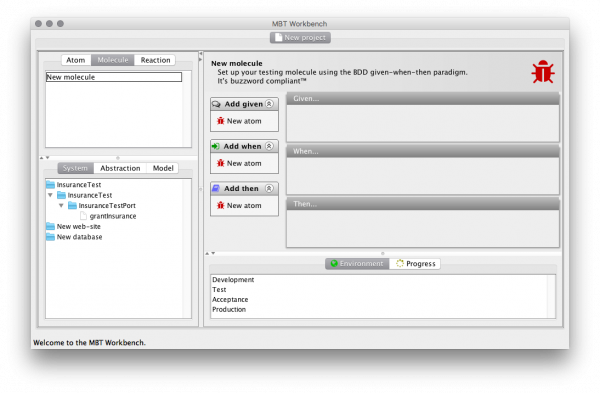

I've been quiet for a while (actually, I originally wrote this post in January, but it then took me another two months to get around to editing it and adding pictures). Studying burns enough mental energy that I have little left for coding projects in my spare time. That doesn't mean I haven't been thinking or that nothing is in the pipeline – I'm an ideas guy (support my Patreon and Kickstarter) – and in this post, I want to show some of the changes I have made so the MBT Workbench can support testing web applications and inspecting databases.

Generalizing Concepts

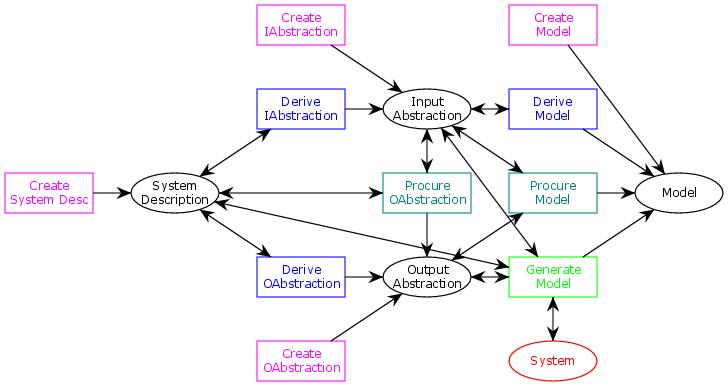

In the meta-model for the MBT Workbench, we used to have central concepts including services or service definitions, input and output mappings, and models, combined to produce tests that can be run and lead to an execution result. These names refer to services (which is a bit web-service specific), mappings (which make sense mathematically but might have incorrect connotations for others) and tests (which is undesirable because really they are just test steps).

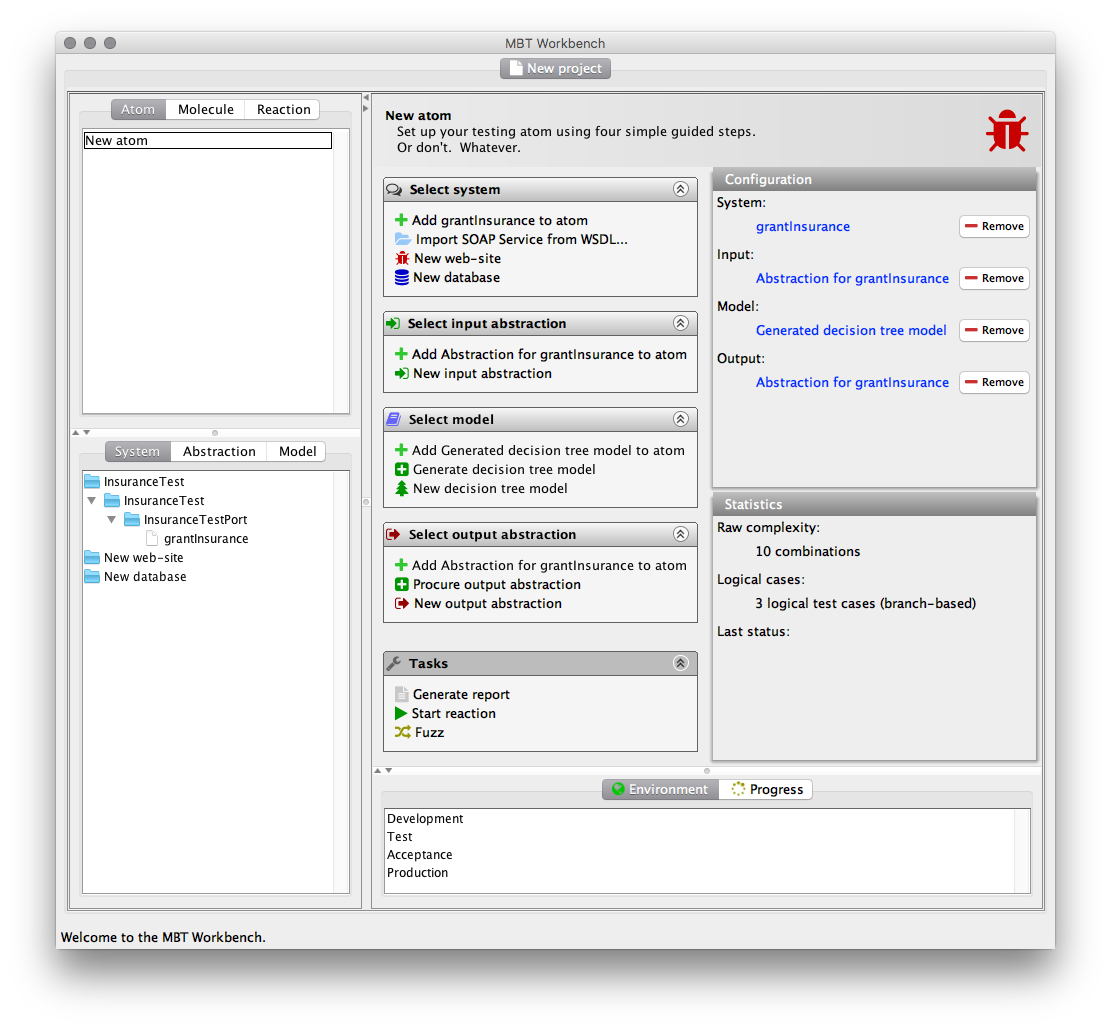

The first two types of artifacts I have simply renamed to systems and abstractions. Systems are more general than services and also fit in with standard testing vocabulary. Abstractions, unlike mappings, capture what the mappings do: they abstract away the irrelevant details of the system domain so that it better matches the model domain.

The naming for tests is a bit unfortunate; when dealing with (simple) service testing, it is not a problem to test each call in isolation. It was always my intention to set up larger scenarios sending off multiple requests, but the naming never took that too well into account. For interaction with web-pages and database inspection, such scenarios become essential. I could have renamed the current tests to test steps and introduced a new type of tests, or I could have introduced a new level of scenarios. I wanted to be able to execute a test consisting of just a single step without having to deal with setting up another structure, so the test step naming was not optimal. Furthermore, I wanted to be able to have steps stand on their own and easily re-use them, a feature the test step naming doesn't exhibit to me. Also, scenarios have a specific meaning within testing, and my new composite tests did not completely match that – a scenario is typically just a single execution of the involved steps with a predefined set of values for parameters.

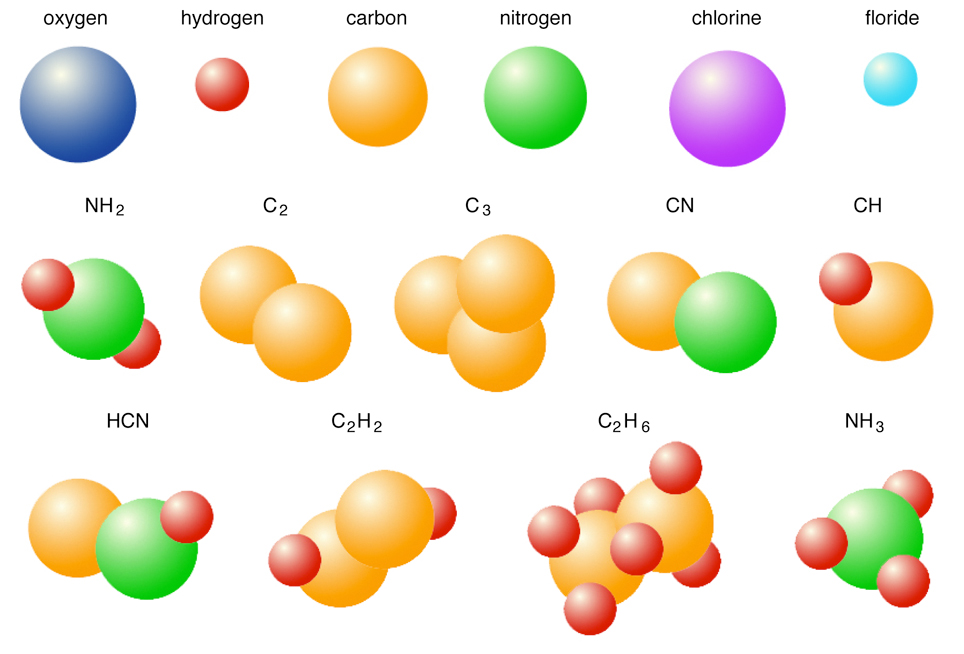

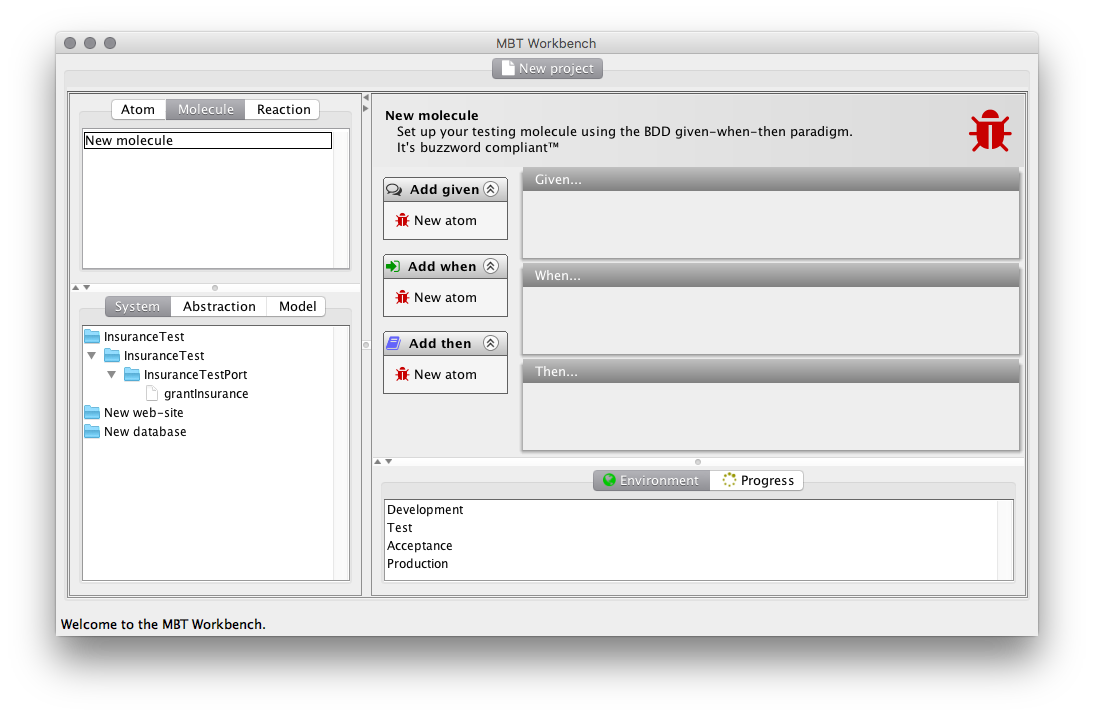

Instead, I decided to invent my own terminology entirely. I wanted to use an analogy that would be reasonably sensible and also it was late in the evening, so I came up with using an analogy from chemistry: the current tests become atoms and the composite new structures molecules. This terminology has the advantage that a single atom can constitute a molecule on its own (for example Neon, Ne), just like some test steps can stand alone. We can refer to steps that cannot stand alone as ions and to slight variations of steps as isotopes. It's all very clever when it is late in the evening.

With this analogy, executions instead become reactions, stressing that they lead to a result (e.g., a report). We can take that further and make full sequences (like stepwise reactions) or general graphs of reactions.

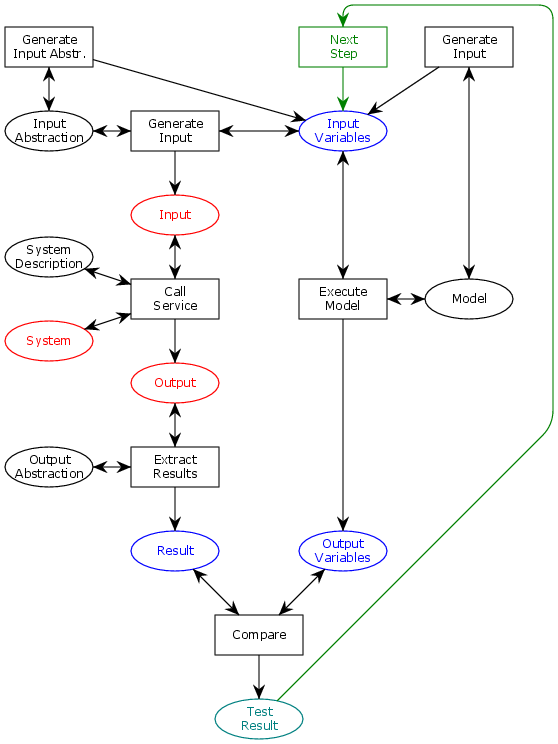

Thus, our new meta-model looks like this:

Aside from renaming, the only new part is that we now have a looping action that is able to feed test results into a next step of the execution. I may elaborate on this part of the meta-model once I get further into the implementation of the functionality.

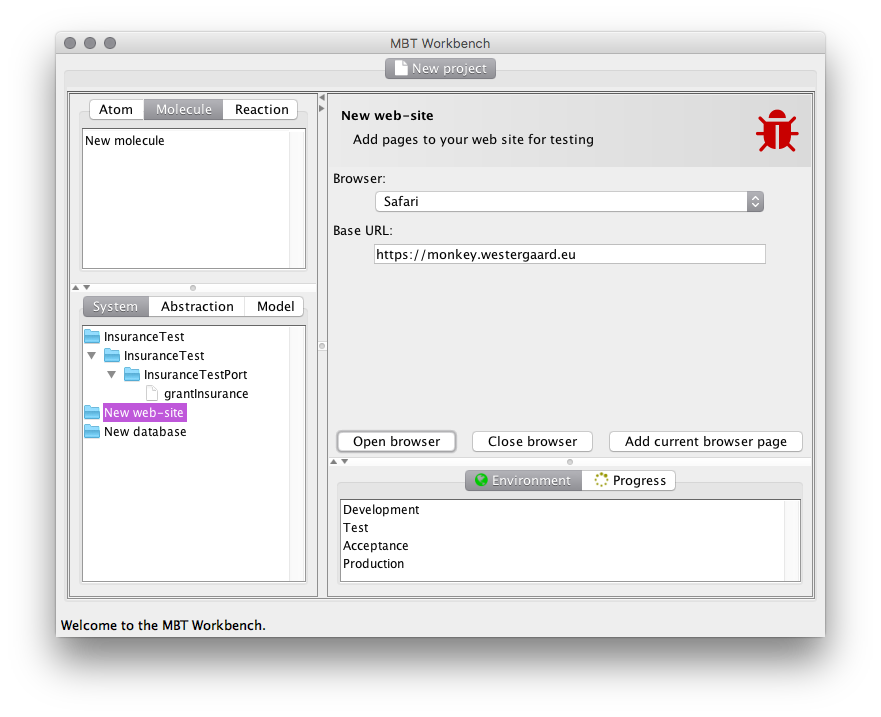

The updated user interface looks like this:

Modeling and Testing Web-pages

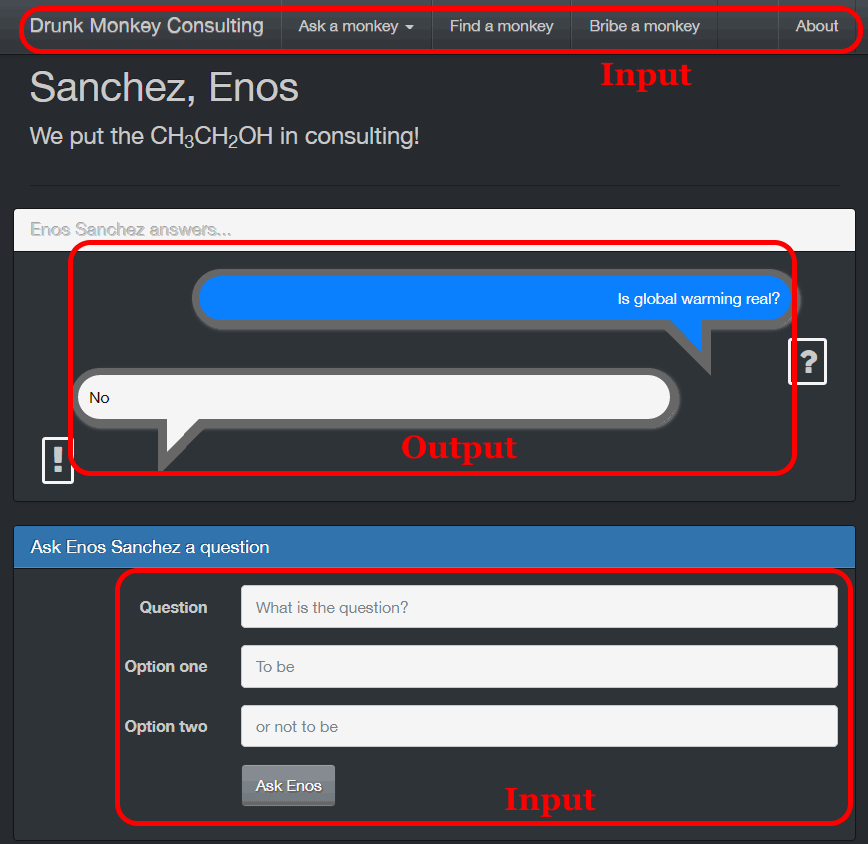

As I indicated previously, I've also become interested in testing web-pages. I want this to fit in with the tool and to take a model-based approach. The idea is to view pages in isolation. When you're on a page, you can fill in some (optionally none) information and perform any of a few actions. This will take you to a page, where you can extract various information.

Viewed like this, web-pages fit well within the framework. When we read data from a web-page, we can think of it as an output abstraction. When we input values into the page, it makes sense to think of it as an input abstraction. This approach fits with how libraries like Serenity handle web-pages: web-pages are represented as objects, and individual elements are identified using XPath expressions. Unlike the MBT Workbench, however, these libraries do not distinguish between input to the page and output from it.

Tools like Serenity make users set up the mapping between page elements and abstractions manually. This is annoying and provides little support to the user to prevent errors and keep track of coverage. I want the MBT Workbench to scan the page and automatically extract all controls. Essentially, all form elements and hyperlinks get recognized and set up as input variables, and all regular text elements are set up as output variables. Like for the web service abstractions, users can also add their own XPath expressions. It should be possible to generate Java classes that can be loaded directly into Serenity from such atoms, making it possible to automate an annoying part of the classical testing approach.
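To make the scanning idea concrete, here is a minimal sketch using only Python's standard `html.parser`. It is not the MBT Workbench's implementation: the `ControlScanner` class, the `scan_page` function, the choice of tags, and the way XPath expressions are built are all assumptions made for illustration. Form controls and hyperlinks become input variables, and simple text elements become output variables.

```python
from html.parser import HTMLParser

# Assumed classification of tags; a real scanner would likely be configurable.
INPUT_TAGS = {"input", "select", "textarea", "button", "a"}
TEXT_TAGS = {"h1", "h2", "h3", "p", "span", "li", "td"}


class ControlScanner(HTMLParser):
    """Hypothetical scanner collecting input and output variables of a page."""

    def __init__(self):
        super().__init__()
        self.inputs = []    # XPath expressions of form controls and links
        self.outputs = {}   # XPath expression -> text found on the page
        self._seen = {}     # per-tag counter used for positional XPaths
        self._current = None
        self._buffer = []

    def _xpath(self, tag, attrs):
        # Prefer stable attributes; fall back to document-order position.
        self._seen[tag] = self._seen.get(tag, 0) + 1
        for key in ("id", "name"):
            if attrs.get(key):
                return f"//{tag}[@{key}='{attrs[key]}']"
        return f"(//{tag})[{self._seen[tag]}]"

    def handle_starttag(self, tag, attrs):
        xpath = self._xpath(tag, dict(attrs))
        if tag in INPUT_TAGS:
            self.inputs.append(xpath)
        elif tag in TEXT_TAGS and self._current is None:
            # Simplification: nested text elements are folded into the outer one.
            self._current, self._buffer = (tag, xpath), []

    def handle_data(self, data):
        if self._current is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._current is not None and self._current[0] == tag:
            text = " ".join("".join(self._buffer).split())
            if text:
                self.outputs[self._current[1]] = text
            self._current = None


def scan_page(html):
    scanner = ControlScanner()
    scanner.feed(html)
    scanner.close()
    return scanner.inputs, scanner.outputs


PAGE = """
<html><body>
  <h1 id="title">Log in</h1>
  <p>Please enter your credentials.</p>
  <form>
    <input name="user"> <input name="password" type="password">
    <button id="submit">Log in</button>
  </form>
  <a href="/help">Help</a>
</body></html>
"""

inputs, outputs = scan_page(PAGE)
print(inputs)
print(outputs)
```

The extracted expressions are only candidates; as described above, users would still be able to add or replace XPath expressions by hand.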

As mentioned, for web-services it is fine – at least as a first version – to assume idempotent/stateless operations where we just send off a single request. For web-pages, we cannot really do that: websites are inherently interaction-based and require multiple steps. When doing old-fashioned testing, you pretty much make a recipe with a sequence of steps to be executed. I wanted to take model-based testing in this direction, but better. For this reason, I introduce the notion of a molecule to augment the existing atoms.

Molecules in the MBT Workbench are composed of multiple atoms, just like in chemistry, or like tests are composed of multiple steps. I am not 100% clear on how exactly I want to implement this, just that I want a meta-model generic enough that I can implement several common patterns. My mental model right now is something similar to how model compositions are made using synchronized products. I unfortunately cannot really link to any easily digestible material about this, but these are concepts within the model-checking community, which despite what you would think is not a community that comes together to stalk runway models.

In the MBT Workbench, I want to compose atoms into molecules by passing output variables of one atom to the input variables of another. I do not want to force this to be sequential, so it would be possible to have

For my first implementation, I am using the BDD pattern (behavior-driven development). It's essentially Hoare logic but

If we have multiple such molecules, they basically induce a concurrent constraint programming system, where each molecule acts as a guarded expression. Together, they are an executable system which can be explored ad-hoc using interpretation or exhaustively using model-checking techniques.
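The guarded-expression view can be sketched roughly as follows. This is an illustration under my own assumptions, not the Workbench's design: `Atom`, `Molecule`, `explore`, and the example atoms are hypothetical names, the atoms are plain functions standing in for real system calls, and atoms inside a molecule run in list order for simplicity. Each molecule asks the shared variable store whether its guard holds and, when it fires, its atoms tell their output variables back to the store.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Store = Dict[str, object]


@dataclass
class Atom:
    """Hypothetical test step: reads named inputs, returns named outputs."""
    name: str
    inputs: List[str]
    run: Callable[..., Dict[str, object]]


@dataclass
class Molecule:
    """A guarded expression: may fire once its guard holds on the store."""
    name: str
    guard: Callable[[Store], bool]
    atoms: List[Atom]

    def react(self, store: Store) -> None:
        for atom in self.atoms:
            args = {var: store[var] for var in atom.inputs}
            store.update(atom.run(**args))  # outputs feed later atoms


def explore(molecules: List[Molecule], store: Store) -> List[str]:
    """Ad-hoc interpretation: fire each molecule once, as soon as it is enabled."""
    pending, fired = list(molecules), []
    progress = True
    while progress:
        progress = False
        for molecule in list(pending):
            if molecule.guard(store):
                molecule.react(store)
                pending.remove(molecule)
                fired.append(molecule.name)
                progress = True
    return fired


# Stand-ins for real atoms (a login form and a profile page).
login = Atom("login", ["user", "password"],
             lambda user, password: {"session": f"token-for-{user}"})
profile = Atom("profile", ["session"],
               lambda session: {"greeting": f"session {session} is active"})

molecules = [
    Molecule("view profile", lambda s: "session" in s, [profile]),
    Molecule("log in", lambda s: all(k in s for k in ("user", "password")), [login]),
]

store = {"user": "alice", "password": "secret"}
fired = explore(molecules, store)
print(fired, store["greeting"])
```

Note that the molecules are listed in the "wrong" order on purpose: the order of execution is driven by which variables are available, not by the order in which the molecules are declared.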

On the model side of things, we do the same: we use a standard synchronized product, where all internal actions are regarded as invisible. We synchronize on the variables, just like we do for the systems, and using the input and output abstractions, we can easily translate the actual values from the execution to model-domain variables. We can analyze the model to derive new scenarios, do guided executions and lots of funny things.

We can test correctness by using the standard simulation relation between the system and the model (if the system can simulate the model it's correct), and even use a bisimulation relation to check completeness (if the systems are bisimilar, all behavior in the system is correct and all behavior in the system is represented using the model).
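For small finite systems, both relations can be computed with a naive fixpoint iteration. The sketch below assumes labelled transition systems encoded as dictionaries from a state to a list of `(label, next_state)` pairs; that encoding, the function names, and the toy `MODEL` and `SYSTEM` are mine and purely illustrative. It starts from all pairs of states and removes pairs until every remaining pair can match each other's moves.

```python
def greatest_relation(lts_a, lts_b, both_ways):
    """Greatest simulation of lts_a by lts_b, or bisimulation if both_ways."""
    rel = {(p, q) for p in lts_a for q in lts_b}
    changed = True
    while changed:
        changed = False
        for p, q in list(rel):
            # Every move of p must be matched by an equally labelled move of q.
            forth = all(
                any(lb == la and (p2, q2) in rel for lb, q2 in lts_b[q])
                for la, p2 in lts_a[p]
            )
            # For bisimulation, every move of q must also be matched by p.
            back = not both_ways or all(
                any(la == lb and (p2, q2) in rel for la, p2 in lts_a[p])
                for lb, q2 in lts_b[q]
            )
            if not (forth and back):
                rel.discard((p, q))
                changed = True
    return rel


def simulates(simulator, simulated, q0, p0):
    """True if `simulator` (from q0) can match every move of `simulated` (from p0)."""
    return (p0, q0) in greatest_relation(simulated, simulator, both_ways=False)


def bisimilar(lts_a, lts_b, p0, q0):
    return (p0, q0) in greatest_relation(lts_a, lts_b, both_ways=True)


# Toy example: the system has one behaviour ("delete") the model lacks.
MODEL = {"s0": [("login", "s1")], "s1": [("logout", "s0")]}
SYSTEM = {
    "t0": [("login", "t1")],
    "t1": [("logout", "t0"), ("delete", "t2")],
    "t2": [],
}

print(simulates(SYSTEM, MODEL, "t0", "s0"))   # system matches every model move
print(simulates(MODEL, SYSTEM, "s0", "t0"))   # model cannot match "delete"
print(bisimilar(MODEL, SYSTEM, "s0", "t0"))
```

In this example the system can simulate the model, but the two are not bisimilar, because the extra `delete` transition has no counterpart in the model.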

For web-pages, a lot of this can be represented using a sitemap. A sitemap can be generated automatically or semi-automatically by inspecting the pages.

Modeling and Testing Databases

Web-applications will often store their data in an SQL database. In fact, many of the tests I am involved in

I'll be the first to admit that I don't think this gains much from the model-based testing approach. A purely data-based system will naturally gain little from a behavior-driven testing approach like MBT. Rather, the advantage of adding this type of atom (which, running with the chemistry analogy, could be called an element) is in molecules.

In a molecule, we can implement a scenario where we first check some database value, execute a web-service, and then check that the database looks as expected. Or, we can prepare a particular database layout for a test scenario to ensure that also external systems we depend on for testing are in a pre-determined state.

For database atoms, the main part would essentially be stored queries. Input variables would be parameters of the query and output variables would be the resulting values (if any). Something would have to be done if a query doesn't always return a single tuple, but I'll deal with that when I get there.
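A database atom along these lines could look roughly like the sketch below, which uses Python's built-in `sqlite3` module with an in-memory database. The `QueryAtom` class, the `orders` table, and the query are assumptions for illustration only. Named query parameters play the role of input variables, and the result columns are exposed as output variables. Returning one dictionary per tuple is just one possible way to handle queries that return zero or several rows.

```python
import sqlite3
from dataclasses import dataclass
from typing import List


@dataclass
class QueryAtom:
    """Hypothetical database atom: a stored query with named parameters."""
    name: str
    sql: str
    outputs: List[str]  # output variable names, one per result column

    def run(self, connection, **inputs):
        rows = connection.execute(self.sql, inputs).fetchall()
        # One dictionary of output variables per returned tuple.
        return [dict(zip(self.outputs, row)) for row in rows]


# Illustrative schema and data, not taken from any real application.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer, total) VALUES (?, ?)",
    [("alice", 10.0), ("alice", 5.5), ("bob", 7.0)],
)

summary = QueryAtom(
    name="order summary",
    sql="SELECT COUNT(*), SUM(total) FROM orders WHERE customer = :customer",
    outputs=["order_count", "order_total"],
)

# Check the database, perform an action, then check the database again.
before = summary.run(conn, customer="alice")
conn.execute(  # stands in for the web-service call under test
    "INSERT INTO orders (customer, total) VALUES (?, ?)", ("alice", 4.5)
)
after = summary.run(conn, customer="alice")
print(before, after)
```

The last few lines mimic the molecule described earlier: a database check, an action on the system, and a second check that the database looks as expected.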

Conclusion

Adding two new domains to the MBT Workbench has required me to do a bunch of refactoring, mostly at a textual level as the tool was already intended to be formalism and system-agnostic.

As part of this, I'll also need to add functionality I have hitherto avoided for the sake of the tool's simplicity, namely support for composite tests, where molecules are composed of atoms.

After these changes are made, I'll be able to start supporting model-based testing of websites and checking values in databases.

Altogether, this is a lot of work but takes the MBT Workbench in a very nice direction. I hope to be able to show a bit more of the work I have done in the field of web-page testing once the social sciences loosen their hold on me a bit.