I’m currently playing around with multiple ideas in the IoT world because I’m trying to collect 200 millistarbucks ((A millistarbucks is a measure of hipsterness I developed. It is defined such that 1000 millistarbucks equals an entire Starbucks full of hipsters on MacBooks.)) of hipster Dictionary. For this I am looking for two things: image pipelining in Camel and an image time-series database.

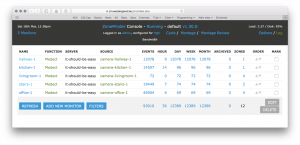

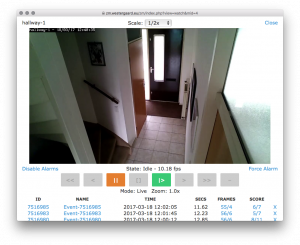

I currently have three image-related use cases: security cameras, a weather camera and webcams. Security cameras are just kind of there. If I want, I can go and check on their status or view live feeds. If a camera detects motion, it will make and store a recording. I am currently using ZoneMinder for this, which is… ok, I guess? It’s not great. It’s open source and C code and PHP code, and if that doesn’t make you run for the hills, I don’t know what will.

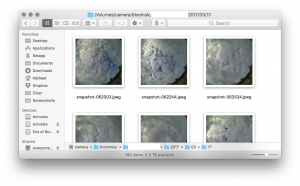

The second case is that my weather camera records images of the sky every 3 minutes during the day. By default, those are uploaded to a cloud service which generates a time-lapse. The cloud service insists on adding big-ass watermarks, though, and also only generates one specific kind of time-lapse and not, e.g., a time-lapse of the same time of every day of the year.

The final case is that I decided to go full-on-hippie and grow my own vegetables. Despite being in the Netherlands, that’s not a euphemism for drugs. In a split-second of brilliance, I decided that putting up a camera in a tribute to watching-grass-grow.com (which seems to be back online!) would amuse me for a brief instant. This would generate a stream of images also ripe for time-lapsing.

All in all, that is a lot of cases where it would be neat to be able to get images in from a source, do some processing (perhaps cleaning, resizing, cropping, watermarking or whatever) and send them to a number of destinations. It would also be neat to do image stuff to the images a posteriori when I get an idea. For example, to simply generate a time-lapse with a given interval and granularity.

Back when I worked at Civolution, we did a lot of processing of streams of images. We would do (forensic) invisible image watermarking by processing a stream of images. The watermarking algorithms would do different things to different frames: some would just be passed through unaltered, and some would be altered. For the altered frames, we would make copies, pass them through various filters (low-pass, high-pass, blur, motion detection, scaling up and down, etc.), and merge the resulting images back into one seemingly unaltered image.

I am currently using Camel for a lot of processing. It is just a simple pipelining language, normally used as part of Red Hat’s ESB product JBoss Fuse. It does pretty much the same thing as the image pipelines above, except that it operates on “messages” instead of frames. Messages are in this context, e.g., web-service requests, tweets, files stored locally or on FTP servers, or e-mails. Camel supports a lot of Enterprise Integration Patterns, which, much like the Workflow Patterns, essentially are just behavioral design patterns for setting up pipelines.

With Camel, I already have set up a simple IoT bus, which is currently storing weather data and images from my weather station. It works pretty well. The image storage functionality was added after I wrote the blog post and just stores snapshots as raw files organized by camera ID, year, month, and day. I am doing a little manipulation, but really only to filter out empty files (which show up during the night) and to generate the location and filename:

[java]
private static DateTimeFormatter pathFormat = DateTimeFormatter.ofPattern("yyyy/MM/dd");

protected void configureSnapshot() {
    from(SNAPSHOT).routeId("snapshot") //
        .convertBodyTo(byte[].class) //
        .choice() //
        .when(e -> {
            // Only let non-empty snapshots through (the camera delivers empty files at night)
            final byte[] body = e.getIn().getBody(byte[].class);
            return body != null && body.length > 0;
        }) //
        .process(e -> {
            // Derive the file name and the year/month/day sub-directory from the snapshot timestamp
            final Instant timestamp = e.getIn().getHeader(PARSED_TIMESTAMP, Instant.class);
            final ZonedDateTime localTime = timestamp.atZone(ZoneId.systemDefault());
            // fileFormat is a second DateTimeFormatter declared elsewhere (not shown here)
            e.getIn().setHeader(Exchange.FILE_NAME, "snapshot-" + fileFormat.format(localTime) + ".jpeg");
            e.getIn().setHeader(EXTRA_PATH, pathFormat.format(localTime));
        }).toD(SNAPSHOT_LOCATION + "/${header." + PARSED_INFO + ".deviceID}/${header." + EXTRA_PATH + "}") //
    ;
}
[/java]

This works for what we are doing: just blindly storing the file. It would be neat to do something more, and at a higher level. For example, images are stored as PNG, but it would make sense to store them as JPEG instead to save space. It would also make sense to crop them, as the fish-eye lens really distorts the edges. I would also be able to watermark the images: add time and date of course, but also a big fat watermark for the version that goes to BloomSky’s own cloud. It would also be neat to be able to blank out portions for anonymity (such as my hobby room window in the bottom right).
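To make the wish list a bit more concrete, here is a minimal sketch of three of these operations (cropping the distorted border, blanking out a region, and re-encoding as JPEG) using only the JDK's own imaging classes. The class name `SnapshotOps` and its method names are made up for illustration; nothing here is part of Camel or of my actual setup.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

// Hypothetical helper class; names are illustrative only.
final class SnapshotOps {

    /** Returns a copy of the image with `margin` pixels removed on every side. */
    static BufferedImage cropMargin(BufferedImage src, int margin) {
        int w = src.getWidth() - 2 * margin;
        int h = src.getHeight() - 2 * margin;
        if (margin < 0 || w <= 0 || h <= 0) {
            throw new IllegalArgumentException("margin does not fit the image");
        }
        BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        // Shift the source so that only its centre lands inside the smaller canvas
        g.drawImage(src, -margin, -margin, null);
        g.dispose();
        return out;
    }

    /** Returns a copy of the image with the given rectangle painted black. */
    static BufferedImage blank(BufferedImage src, int x, int y, int w, int h) {
        BufferedImage out = new BufferedImage(src.getWidth(), src.getHeight(), BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        g.drawImage(src, 0, 0, null);
        g.setColor(Color.BLACK);
        g.fillRect(x, y, w, h);
        g.dispose();
        return out;
    }

    /** Encodes an RGB image (no alpha channel) as JPEG bytes. */
    static byte[] toJpeg(BufferedImage img) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try {
            if (!ImageIO.write(img, "jpg", out)) {
                throw new IllegalStateException("no JPEG writer available");
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }
}
```

Each method returns a fresh image rather than modifying its input, so the original snapshot can still be stored untouched alongside the processed version.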

All of this is possible with Camel using the Message Translator EIP, but it is not easy, because images are not a first-class data format in Camel. It would be neat if that were the case. Then it would be possible to implement a simple DSL for doing routine transformations.
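As a rough idea of what such a DSL could look like, here is a small fluent builder that chains image transformations. This is purely a sketch: `ImagePipeline` and its methods are hypothetical names, not an existing Camel API, and it only covers cropping and scaling.

```java
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical fluent DSL for routine image transformations.
final class ImagePipeline {
    private final List<UnaryOperator<BufferedImage>> steps = new ArrayList<>();

    static ImagePipeline start() {
        return new ImagePipeline();
    }

    /** Keeps only the w x h rectangle whose top-left corner is (x, y). */
    ImagePipeline crop(int x, int y, int w, int h) {
        steps.add(img -> redraw(img.getSubimage(x, y, w, h), w, h));
        return this;
    }

    /** Scales the image to exactly w x h pixels. */
    ImagePipeline scaleTo(int w, int h) {
        steps.add(img -> redraw(img, w, h));
        return this;
    }

    /** Escape hatch for any custom step. */
    ImagePipeline then(UnaryOperator<BufferedImage> step) {
        steps.add(step);
        return this;
    }

    /** Runs all steps in the order they were added. */
    BufferedImage apply(BufferedImage input) {
        BufferedImage current = input;
        for (UnaryOperator<BufferedImage> step : steps) {
            current = step.apply(current);
        }
        return current;
    }

    // Draws src onto a new RGB canvas of the requested size (scaling if needed)
    private static BufferedImage redraw(BufferedImage src, int w, int h) {
        BufferedImage out = new BufferedImage(w, h, BufferedImage.TYPE_INT_RGB);
        Graphics2D g = out.createGraphics();
        g.drawImage(src, 0, 0, w, h, null);
        g.dispose();
        return out;
    }
}
```

A route could then build something like `ImagePipeline.start().crop(100, 100, 800, 600).scaleTo(400, 300)` once and call `apply` from a processing step, assuming the message body has already been decoded to a `BufferedImage`.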

With images integrated as first-class citizens in Camel, it would furthermore be possible to add data sources and sinks so I could fetch images from the web-service (as I already do), directly from the web-service of my security cameras or from the Plants vs. Zombies (just minus the zombies) camera I ordered this morning. I could store them as files (as I do now) or I could send them to a live-stream YouTube channel, instigating the new ultimate trend in Let’s Plays: watching plants grow. I could even use the above image transformations to add interesting features like a facecam which is just a static image of my butt. More action-packed than your average Minecraft stream!

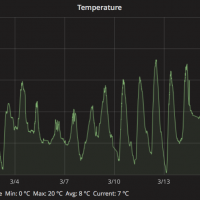

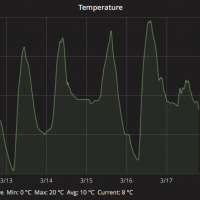

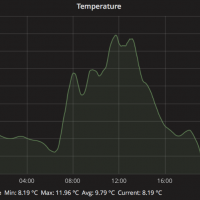

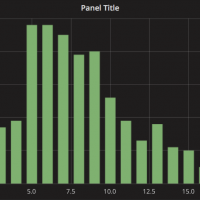

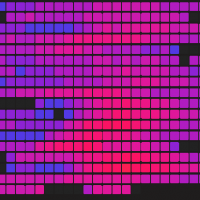

But why stop there? The next thing would be a dedicated image time-series database. I already use a time-series database for storing my weather data. That works really well. I can just dump in raw data and do processing afterwards. For example, I can view the temperature on various scales just by selecting the time frame and granularity, and the database efficiently gives me the values. The database can compute daily averages because it knows the data is a time series. Here are five visualizations of temperature data: three standard graphs showing the temperature for the last day, week and month, a heat-map visualizing the temperature at each time of day for the past month, and a histogram showing how many hours the average temperature was at a certain level (temperature on the first axis and number of hours on the second axis). Using extensions, I can add pretty much any visualization I would like, and can do relatively complex manipulations.
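What "selecting a granularity" boils down to is grouping samples into fixed-width time buckets and aggregating each bucket. A minimal sketch of that idea in plain Java follows; the class `Downsample` is hypothetical and is not how the database implements it, it just illustrates the computation.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration of bucketed averaging over a time series.
final class Downsample {

    /**
     * Averages samples into fixed-width buckets aligned to the Unix epoch (UTC).
     * The keys of the result are the bucket start times, in ascending order.
     */
    static TreeMap<Instant, Double> mean(Map<Instant, Double> samples, Duration granularity) {
        long width = granularity.toMillis();
        if (width <= 0) {
            throw new IllegalArgumentException("granularity must be at least one millisecond");
        }
        // bucket start (epoch millis) -> {sum, count}
        TreeMap<Long, double[]> acc = new TreeMap<>();
        for (Map.Entry<Instant, Double> s : samples.entrySet()) {
            long bucket = Math.floorDiv(s.getKey().toEpochMilli(), width) * width;
            double[] a = acc.computeIfAbsent(bucket, k -> new double[2]);
            a[0] += s.getValue();
            a[1] += 1;
        }
        TreeMap<Instant, Double> out = new TreeMap<>();
        acc.forEach((bucket, a) -> out.put(Instant.ofEpochMilli(bucket), a[0] / a[1]));
        return out;
    }
}
```

With a granularity of one day this yields daily averages, although the buckets are aligned to UTC midnight rather than local midnight.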

What would be cool is a similar store for images. I just throw in (streams of) images and the database knows it’s a time series of images. I can extract an interval with a given granularity to view as a movie (use MJPEG or MNG for efficiency but allow export to real formats). Just like InfluxDB allows me to do simple computations and aggregations, allow me to do the same on the images. I am sure we could go a long way with simple convolution filtering of individual images and various kinds of masking/combining of images near one another. Add some standard effects like motion detection, blur, overlay and watermark (pretty much the same as I wanted for Camel). Perhaps even complex algorithms like face detection or recognition (useful for using the security camera for more smart-home-like applications with personal preferences). Heck, throw in a generic neural network labeling algorithm.
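Extracting "an interval with a given granularity" could be as simple as picking the first frame of every time bucket. Here is a sketch under the assumption that frames are held in a sorted map keyed by timestamp; `TimeLapse.select` is a made-up name, and a real database would of course do this on its own storage format.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.NavigableMap;

// Hypothetical frame selection for a time-lapse.
final class TimeLapse {

    /**
     * Walks [from, to) in steps of `step` and returns, for each step, the first
     * frame whose timestamp falls inside it. Steps without any frame are skipped.
     */
    static <T> List<T> select(NavigableMap<Instant, T> frames, Instant from, Instant to, Duration step) {
        if (step.isZero() || step.isNegative()) {
            throw new IllegalArgumentException("step must be positive");
        }
        List<T> out = new ArrayList<>();
        for (Instant start = from; start.isBefore(to); start = start.plus(step)) {
            Instant next = start.plus(step);
            Instant end = next.isBefore(to) ? next : to;
            // Earliest frame at or after the start of this bucket
            Map.Entry<Instant, T> first = frames.ceilingEntry(start);
            if (first != null && first.getKey().isBefore(end)) {
                out.add(first.getValue());
            }
        }
        return out;
    }
}
```

Setting `from` to noon and `step` to 24 hours would give roughly the "same time every day" time-lapse the cloud service cannot produce, as long as fixed 24-hour steps are acceptable (they ignore daylight saving time shifts).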

If we add a Camel endpoint for this new image time-series database, I could just dump the images into the database for offline processing, and do in Camel only what has to happen live (motion detection for alarms, face detection for smart home, watermarking for streaming, etc.).

I could do an a posteriori query which would amount to “give me interesting events of today” by combining the database’s knowledge of motion data with time information. ZoneMinder records this information live, which means it has to select the interesting events beforehand, and cannot show a heat-map of motion and allow me to interactively select interesting events a posteriori. Similarly, I could extract a time-lapse by selecting all images for a desired interval with a specific grouping and aggregation.
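One simple way to get the motion data for such a query is plain frame differencing: score each frame by how much it differs from the previous one and keep the scores, so the threshold can be chosen afterwards. The sketch below assumes equally sized frames and uses hypothetical names (`Motion.score`, `Motion.events`); it is not how ZoneMinder detects motion.

```java
import java.awt.image.BufferedImage;
import java.util.ArrayList;
import java.util.List;

// Hypothetical frame-differencing motion score.
final class Motion {

    /** Mean absolute per-channel difference between two frames, in the range 0..255. */
    static double score(BufferedImage a, BufferedImage b) {
        int w = a.getWidth();
        int h = a.getHeight();
        if (w != b.getWidth() || h != b.getHeight()) {
            throw new IllegalArgumentException("frames must have the same size");
        }
        long sum = 0;
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                int p = a.getRGB(x, y);
                int q = b.getRGB(x, y);
                sum += Math.abs(((p >> 16) & 0xFF) - ((q >> 16) & 0xFF)); // red
                sum += Math.abs(((p >> 8) & 0xFF) - ((q >> 8) & 0xFF));   // green
                sum += Math.abs((p & 0xFF) - (q & 0xFF));                 // blue
            }
        }
        return sum / (3.0 * w * h);
    }

    /** Indices of frames that differ from their predecessor by more than the threshold. */
    static List<Integer> events(List<BufferedImage> frames, double threshold) {
        List<Integer> out = new ArrayList<>();
        for (int i = 1; i < frames.size(); i++) {
            if (score(frames.get(i - 1), frames.get(i)) > threshold) {
                out.add(i);
            }
        }
        return out;
    }
}
```

Because the score is just a number per frame, it could be stored as an ordinary time series and plotted as the heat-map of motion, with `events` re-run for any threshold that looks interesting.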

Dirk suggested pre-computing the values and storing everything in MongoDB. With appropriate indexing, that might kind-of work. If the original images are stored as well, it would be feasible to recompute interesting stats. Expanding on the idea, the picture data could be stored in a “standard” NoSQL database like MongoDB or Cassandra. Computed numerical values would be stored in a time-series database like InfluxDB.

This would kind-of work, but would come with some caveats also encountered with time-series databases built on non-time-aware databases (like OpenTSDB, which is built on HBase and Hadoop): data could be stored more efficiently if we knew more about it. Storing an image as a BLOB forces a particular image format not necessarily optimized for analysis. Such a format would almost inevitably use more storage than a format able to also exploit inter-frame compression. A database knowing it is dealing with images would also be able to cache and re-use computations more efficiently (e.g., smarter convolution filtering by multiplying kernels, or storing various frequently used intermediate results).
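The kernel trick relies on convolution being associative: filtering with kernel A and then kernel B gives the same result (ignoring border handling) as filtering once with the kernel obtained by convolving A with B. A minimal sketch of that kernel composition, with the hypothetical name `Kernels.compose` and assuming rectangular, non-empty kernels:

```java
// Hypothetical helper for merging two convolution kernels into one.
final class Kernels {

    /**
     * Full 2-D convolution of two kernels. For an (ha x wa) and an (hb x wb)
     * kernel the result has size (ha + hb - 1) x (wa + wb - 1).
     */
    static float[][] compose(float[][] a, float[][] b) {
        int ha = a.length, wa = a[0].length;
        int hb = b.length, wb = b[0].length;
        float[][] out = new float[ha + hb - 1][wa + wb - 1];
        for (int i = 0; i < ha; i++) {
            for (int j = 0; j < wa; j++) {
                for (int k = 0; k < hb; k++) {
                    for (int l = 0; l < wb; l++) {
                        // Every pair of taps contributes to the tap at their summed offsets
                        out[i + k][j + l] += a[i][j] * b[k][l];
                    }
                }
            }
        }
        return out;
    }
}
```

For example, composing the horizontal kernel `{{1, 2, 1}}` with the same kernel turned vertical yields the familiar 3×3 kernel with 4 in the centre, so a database that sees both filters in one query could apply them in a single pass over the image.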