Having written 3 posts about model-based testing, I thought it would be instructive to also include a brief explanation of what the model-based testing approach is all about, in case somebody isn’t 100% up-to-date with decade-old buzzwords of academia.

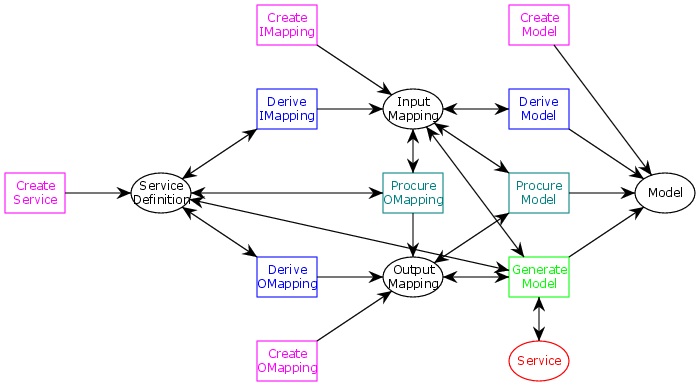

Revisiting the meta-model for the MBT Workbench from this post, I have added a new red artifact: the actual service implementation. It is important even though it is outside the scope of the tool, and it is a prerequisite for the more automatic approaches.

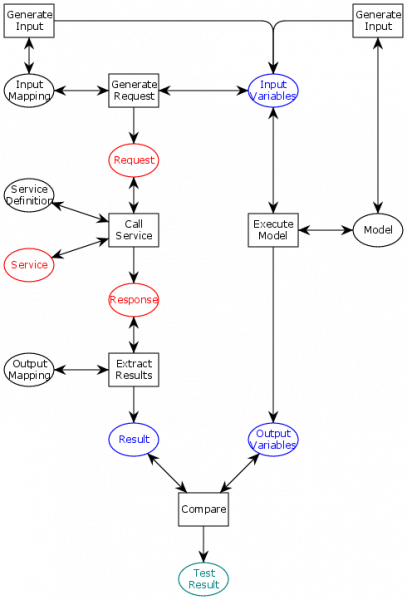

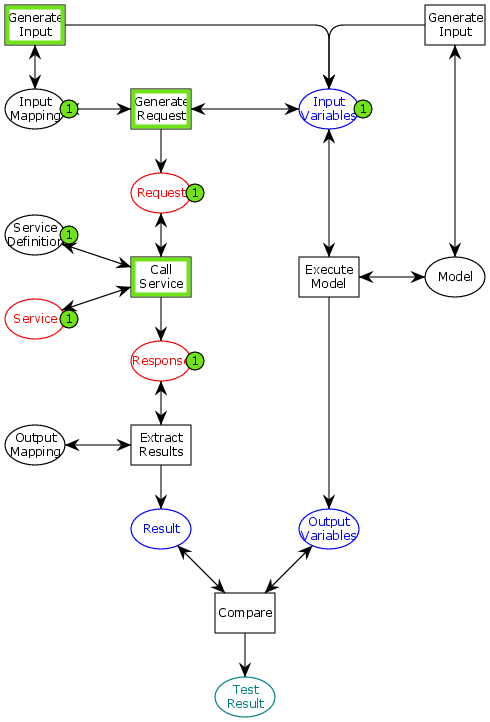

The reason we are generating these 4 (5) artifacts should be evident if we look at this model of how the model-based testing approach is implemented:

We again see the 4 artifacts from the MBT Workbench: service definitions, input mappings, output mappings, and models. We also see artifacts in the domain of the model (blue ellipses) and in the domain of the web-service (red ellipses). Finally, we have a teal artifact that is just the test result, which we will not concern ourselves with for now. The actions have not been color-coded as before, as they are all fully automatic steps, which would all be that barely-readable green.

Along the right, we see the model-based part of the approach. By supplying a Model with Input Variables, we can Execute the model and obtain Output Variables. The Input Variables can be derived automatically from the model (on an input mapping), because we have made sure that all input variables come from (small) finite domains, so it is viable to try all possible combinations. Thus, the Generate Input task consists of just enumerating the Cartesian product of the domains of all input variables. That’s math-speak for “nested for loops.”
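To make the “nested for loops” remark concrete, here is a minimal sketch of the Generate Input step. The variable names and domains (`age`, `member`) are made up for illustration, and `generate_inputs` is a hypothetical helper, not something taken from the MBT Workbench.

```python
from itertools import product


def generate_inputs(domains):
    """Yield one dict per combination of input-variable values.

    `domains` maps each input variable name to its (small, finite) domain.
    """
    names = list(domains)
    # product(...) enumerates the Cartesian product, i.e. the nested for loops.
    for values in product(*(domains[name] for name in names)):
        yield dict(zip(names, values))


# Hypothetical input variables with small finite domains.
domains = {
    "age": [17, 18, 65],
    "member": [True, False],
}

inputs = list(generate_inputs(domains))
for combination in inputs:
    print(combination)
```

With 3 values for `age` and 2 for `member`, this yields 3 × 2 = 6 combinations. The count grows multiplicatively with every extra variable, which is why keeping the domains small matters.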

The left-hand side of the figure is a little more interesting, as we deal with the real web-service. Here, the first order of business is to Generate Request. The Request is an actual web-service request and is generated by mapping the Input Variables using the Input Mapping. With a Request in hand, we can consult the Service Definition and Call Service, which invokes the real Service implementation to obtain actual Response values. We then use the Output Mapping to Extract Results from the service response.

When both branches have completed, we can Compare the Results with the Output Variables to obtain a pass/fail result for our test. This can produce a more or less detailed test report.
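The two branches and the final comparison can be sketched roughly as follows. Everything here is an assumption made for illustration: the model, the mappings, the field names, and the in-process `call_service` stand-in (a real setup would issue an actual web-service call based on the Service Definition). The stand-in contains a deliberate off-by-one bug so that the comparison has something to find.

```python
from itertools import product


# Hypothetical model: expected behavior over abstract variables.
def model(inputs):
    return {"discount": inputs["member"] and inputs["age"] >= 65}


# Hypothetical stand-in for the real service (deliberately buggy: > instead of >=).
def call_service(request):
    eligible = request["isMember"] and request["customerAge"] > 65
    return {"result": {"discountGranted": eligible}}


# Input mapping: model variable -> request field.
INPUT_MAPPING = {"age": "customerAge", "member": "isMember"}
# Output mapping: model variable -> path into the response.
OUTPUT_MAPPING = {"discount": ("result", "discountGranted")}


def generate_request(inputs):
    return {INPUT_MAPPING[name]: value for name, value in inputs.items()}


def extract_results(response):
    results = {}
    for name, path in OUTPUT_MAPPING.items():
        value = response
        for key in path:
            value = value[key]
        results[name] = value
    return results


def run_tests(domains):
    """Run both branches for every input combination and compare them."""
    report = []
    names = list(domains)
    for values in product(*(domains[n] for n in names)):
        inputs = dict(zip(names, values))
        expected = model(inputs)  # right-hand branch: Execute
        actual = extract_results(call_service(generate_request(inputs)))  # left-hand branch
        report.append((inputs, expected, actual, expected == actual))  # Compare
    return report


report = run_tests({"age": [17, 18, 65], "member": [True, False]})
failures = [entry for entry in report if not entry[3]]
for inputs, expected, actual, _ in failures:
    print("FAIL", inputs, "expected", expected, "got", actual)
```

Here the only failing case is the 65-year-old member, where the model grants a discount and the stand-in service does not. The `report` list is the raw material for a more or less detailed test report.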

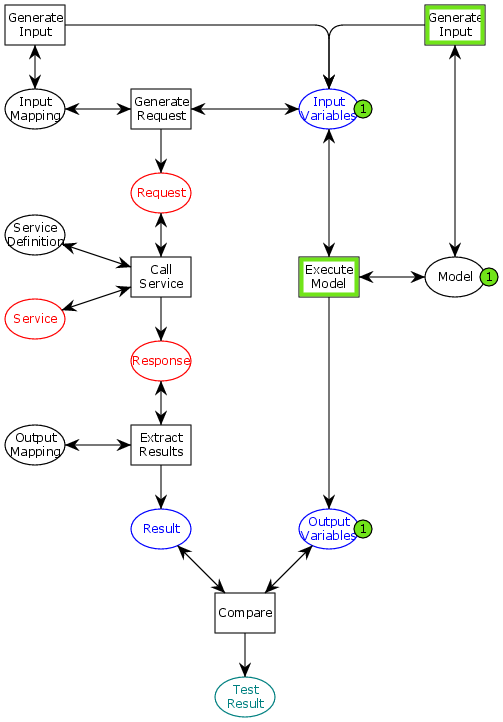

Interestingly, not all (black) artifacts need to be present before parts of the procedure can execute.

For example, if we only have a Model available, we can actually perform the entire right-hand part of the figure. This allows us to explore the behavior of the entire system without even having a service description available. This is the result of executing the approach with just a Model available (a small 1 in a green circle means there is one copy of the artifact available, and a green outline around an action means it can be executed):

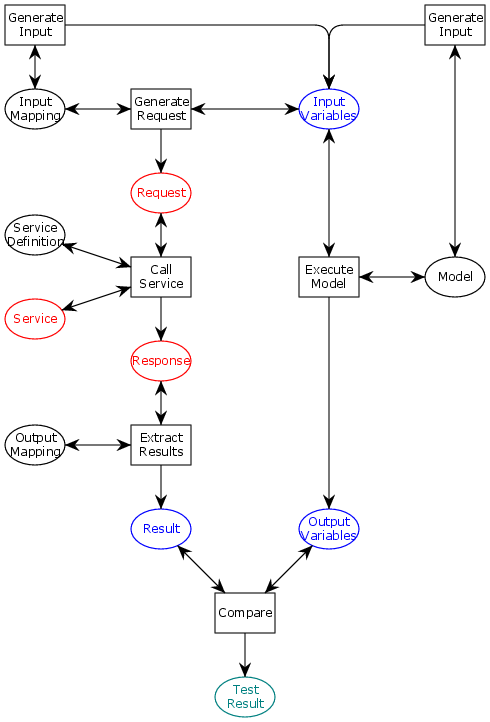

Independently, if we have a service definition and a service (the normal testing situation), we can use the MBT Workbench to generate the input mapping (as outlined in the previous post), and execute this fragment of the entire approach:

With actual Response values in hand, it is easy to see how we can derive an Output Mapping using an approach similar to how we generated the Input Mapping. With an Output Mapping and a Response in hand, we can derive Results, which can simply be taken as correct.
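As a rough illustration of that idea, the sketch below derives an output mapping by giving every leaf value of a response its own output variable, and then records the extracted Results as a baseline. The flattening scheme, the underscore naming, and the sample response are all assumptions for the sake of the example; the MBT Workbench may well derive its mappings differently.

```python
def derive_output_mapping(response, prefix=()):
    """Map one output variable to each leaf of a nested response dict."""
    mapping = {}
    for key, value in response.items():
        path = prefix + (key,)
        if isinstance(value, dict):
            mapping.update(derive_output_mapping(value, path))
        else:
            # Hypothetical naming scheme: join the path with underscores.
            mapping["_".join(path)] = path
    return mapping


def extract_results(response, mapping):
    """Follow each path in the mapping to pull values out of the response."""
    results = {}
    for name, path in mapping.items():
        value = response
        for key in path:
            value = value[key]
        results[name] = value
    return results


# Hypothetical response observed from the real service.
response = {"result": {"discountGranted": False, "price": 100}}

mapping = derive_output_mapping(response)
baseline = extract_results(response, mapping)  # taken as correct for now
print(mapping)
print(baseline)
```

Results recorded this way only capture what the service currently does, so they are useful for spotting later changes in behavior rather than for proving the current behavior right.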

This figure illustrates the many facets supported by the MBT Workbench: you can start entirely model-driven, entirely implementation-driven, or somewhere in between, to ensure you have derived the 4 artifacts needed to execute the entire method. Everything in this figure is fully automatic and can be used to support the meta-model underlying the MBT Workbench.